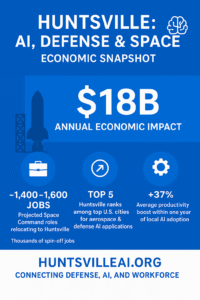

Huntsville is uniquely positioned at the intersection of defense, aerospace, cybersecurity, engineering, and government contracting—exactly where agentic AI will have the most immediate impact.

Agentic AI refers to systems that can carry out tasks autonomously within defined boundaries. In Huntsville, this means real, measurable outcomes: faster cyber threat monitoring, improved engineering simulation, automated compliance workflows, smarter logistics, and research teams that can move faster without adding headcount. This is not about replacing mission-critical professionals; it is about reducing cognitive overload and improving performance in high-stakes environments.

Understandably, workforce concerns often follow discussions of AI. The reality is more nuanced. While some repetitive tasks will fade, new roles will emerge—particularly for professionals who can supervise AI systems, set clear objectives, validate outputs, and intervene when judgment is required. In 2026, the most valuable workers will not be those who try to compete with AI, but those who can manage it responsibly.

Agentic AI also raises important ethical considerations. Unlike traditional AI tools, which merely suggest, agentic systems can act. This increases the need for accountability, human-in-the-loop oversight, clear operational limits, and transparent decision logging, especially in defense and public-sector contexts. Huntsville has an opportunity not just to adopt these systems, but to lead nationally in how they are governed.

The difference between organizations that benefit from agentic AI and those that struggle will come down to preparation. Panic leads to inaction or misuse. Preparedness leads to resilience, competitiveness, and opportunity—for small contractors, nonprofits, educators, and startups alike.

The question is not whether AI will act with increasing autonomy. The question is who sets the rules, who understands the systems, and who benefits from them.

Respectfully,

Christopher Coleman